How Engineering Leaders Use Oobeya to Understand Real Productivity, Quality, and ROI

AI-powered coding assistants such as GitHub Copilot, Cursor, Windsurf, and Claude are rapidly transforming the way software is built. But despite the industry hype, most engineering leaders still struggle with one essential question:

How do we measure the real impact of AI-assisted development across teams, delivery pipelines, and business outcomes?

Many organizations currently rely on surface-level indicators:

- Developer surveys and anecdotal productivity claims

- IDE-level suggestions and acceptance metrics

- Local scripts with limited visibility

- Incomplete license usage stats

These indicators fail to answer the deeper, strategic questions that drive investment decisions:

- Is AI actually improving end-to-end delivery and quality?

- How does AI affect crucial metrics like Cycle Time, rework, or review load?

- Are we overpaying for underused licenses, and what is the true ROI?

- Where do teams need coaching, governance, or targeted training?

Oobeya’s AI Coding Assistant Impact framework addresses these gaps with an SDLC-wide approach to measuring AI adoption and its effects, organized into six layers. The framework is fully supported by the Oobeya platform’s dedicated AI Impact module.

1. Visibility Layer: Understand Adoption and Engagement

Before analyzing organizational outcomes, leaders must establish clear, continuous visibility into who is using the AI assistants and how often.

Oobeya provides this visibility through Copilot Engagement & Acceptance Trends, which track the following metrics:

- Active Users: Users who have the coding assistant (Copilot) installed and have interacted with it.

- Engaged Users: Users who have accepted at least one coding assistant suggestion, indicating successful integration into their workflow.

- Adoption Rate: The ratio of engaged users to active users, which indicates how much of the engineering organization is actively benefiting from AI-assisted development.

- Code Acceptance Ratio: The percentage of AI-generated suggestions that developers accept.

This initial layer pinpoints successful adopters, highlights teams with low or inconsistent use, and quickly identifies underutilized licenses. This answers the foundational question: Are people actually using the AI coding assistants?
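To make the relationships between these visibility metrics concrete, here is a minimal Python sketch. It assumes a hypothetical per-seat record (`SeatActivity`, with illustrative field names); it is not Oobeya's data model or API. The `idle_licenses` count is an added illustration of how underutilized licenses can be spotted from the same data.

```python
from dataclasses import dataclass


@dataclass
class SeatActivity:
    """One licensed seat over a reporting period (hypothetical schema)."""
    user: str
    interacted: bool            # used the assistant at least once in the period
    suggestions_shown: int
    suggestions_accepted: int


def adoption_metrics(seats: list[SeatActivity]) -> dict[str, float]:
    """Compute visibility-layer metrics from per-seat activity records."""
    active = [s for s in seats if s.interacted]
    engaged = [s for s in active if s.suggestions_accepted > 0]
    shown = sum(s.suggestions_shown for s in active)
    accepted = sum(s.suggestions_accepted for s in active)
    return {
        "active_users": len(active),
        "engaged_users": len(engaged),
        # Engaged users as a share of active users.
        "adoption_rate": len(engaged) / len(active) if active else 0.0,
        # Accepted suggestions as a share of suggestions shown.
        "acceptance_ratio": accepted / shown if shown else 0.0,
        # Licensed seats with no interaction at all in the period.
        "idle_licenses": len(seats) - len(active),
    }


seats = [
    SeatActivity("alice", True, 100, 30),
    SeatActivity("bob", True, 50, 0),
    SeatActivity("carol", True, 40, 10),
    SeatActivity("dave", False, 0, 0),
]
print(adoption_metrics(seats))
```

With this sample, three of the four seats are active, two users are engaged (an adoption rate of about 67%), 40 of 190 suggestions were accepted (about 21%), and one license sits idle.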

Crucially, usage alone does not equal impact.

2. Productivity Impact Layer: Measure Meaningful Output Changes

The next step is to link usage to real engineering outcomes. Oobeya analyzes how AI-generated contributions flow through the SDLC.

Key metrics for measuring productivity change include:

- Coding Impact Score: A composite performance indicator based on code contribution patterns, ownership, complexity, and structural analysis.

- Coding Efficiency Change: Shows whether developers produce meaningful code more efficiently when assisted by AI, by comparing teams that use AI with teams that do not.

The platform also detects AI-assisted contributions at a granular level, identifying AI-generated code blocks, multi-line suggestions, repeated patterns, and structural similarity to AI-generated code. This layer shows how AI affects real throughput and value creation, not just volume.
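A cohort comparison like Coding Efficiency Change can be sketched as a relative difference between two groups. The sketch below is an illustration under stated assumptions, not Oobeya's formula: each value is one developer's score on some hypothetical "meaningful output" metric for a period, and the median is used so that a single unusually productive or unproductive developer does not dominate the result.

```python
from statistics import median


def efficiency_change(ai_cohort: list[float], non_ai_cohort: list[float]) -> float:
    """Percent difference of the AI cohort's median output vs. the non-AI baseline.

    Each value is one developer's output for the period, measured with a
    hypothetical "meaningful output" metric chosen by the organization.
    """
    if not ai_cohort or not non_ai_cohort:
        raise ValueError("both cohorts need at least one observation")
    baseline = median(non_ai_cohort)
    if baseline == 0:
        raise ValueError("baseline median is zero; relative change is undefined")
    return (median(ai_cohort) - baseline) / baseline * 100


# Hypothetical per-developer scores for one period.
ai_team = [12, 15, 14, 18]
non_ai_team = [10, 12, 11, 13]
print(f"{efficiency_change(ai_team, non_ai_team):+.1f}%")  # +26.1%
```

A difference between cohorts is a correlation, not proof of causation: team composition, codebase, and task mix can all differ, which is why the comparison is most useful alongside the quality and delivery layers.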

3. Quality Impact Layer: Ensure AI Does Not Introduce Hidden Risks

Increased output is only beneficial when code quality remains stable or improves. Unchecked AI assistance can inadvertently introduce debt or vulnerabilities.

Through integrations with static analysis tools like SonarQube, test reporting systems, and CI/CD pipelines, Oobeya measures:

- AI vs. Non-AI Code Quality: Compares the SonarQube bugs, vulnerabilities, code smells, and technical debt patterns introduced by AI-assisted code with those in non-AI code. (See how it integrates: Oobeya + SonarQube Integration.)

- Rework & Review Rejections: Shows whether AI-assisted changes trigger additional, unnecessary review loops that increase reviewer burden.

- Test Coverage Impact: Analyzes whether AI-generated tests improve quality or introduce brittleness.

This layer answers the crucial question:

Is AI helping us deliver better quality, or is it creating new risks and increasing long-term debt?
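One way to put AI-assisted and non-AI code on the same footing is to normalize new issues by code size and to count how often changes need more than one review round. The sketch below assumes a hypothetical per-PR record (`MergedChange`) where the issue count would come from a static analysis tool such as SonarQube; the schema and the "more than one round means rework" rule are illustrative assumptions, not Oobeya's implementation.

```python
from dataclasses import dataclass


@dataclass
class MergedChange:
    """One merged pull request (hypothetical schema)."""
    ai_assisted: bool
    lines_added: int
    new_issues: int      # new static-analysis findings: bugs, vulnerabilities, code smells
    review_rounds: int   # 1 means approved on the first review


def quality_summary(changes: list[MergedChange], ai_assisted: bool) -> dict[str, float]:
    """Issue density and rework rate for either the AI-assisted or the non-AI group."""
    group = [c for c in changes if c.ai_assisted == ai_assisted]
    lines = sum(c.lines_added for c in group)
    issues = sum(c.new_issues for c in group)
    reworked = sum(1 for c in group if c.review_rounds > 1)
    return {
        # New issues per 1,000 added lines, so groups of different size are comparable.
        "issues_per_kloc": issues * 1000 / lines if lines else 0.0,
        # Share of changes that needed more than one review round.
        "rework_rate": reworked / len(group) if group else 0.0,
    }


changes = [
    MergedChange(True, 400, 4, 2),
    MergedChange(True, 600, 2, 1),
    MergedChange(False, 500, 3, 1),
    MergedChange(False, 500, 1, 1),
]
print("AI:    ", quality_summary(changes, ai_assisted=True))
print("Non-AI:", quality_summary(changes, ai_assisted=False))
```

In this made-up sample the AI-assisted group has 6.0 new issues per 1,000 lines and a 50% rework rate, versus 4.0 and 0% for the non-AI group: the kind of gap this layer is meant to surface.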

4. Delivery & Flow Layer: Evaluate Software Delivery Impact End-to-End

Unlike local IDE or commit-level tools, Oobeya provides a full SDLC view across Jira, Azure Boards, GitHub, GitLab, SonarQube, and CI/CD systems.

This holistic analysis evaluates how AI usage affects the entire flow of value:

- Lead Time for Changes: Do AI-assisted developers deliver user stories faster from inception to production?

- Cycle Time Breakdown: Granular insights into coding, review, merge, and deployment phases to pinpoint exactly where AI acceleration occurs.

- Review Workload: Does AI increase or decrease the overall reviewer burden?

- Flow Efficiency: Evaluates whether AI reduces wait time or accelerates active work progress.

This comprehensive view answers the question:

Is AI improving delivery performance, not just local coding speed?
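The Cycle Time Breakdown and Flow Efficiency metrics reduce to timestamp arithmetic. This minimal sketch assumes hypothetical phase boundaries (coding: first commit to PR opened; review: PR opened to approval; merge: approval to merge; deploy: merge to production) and uses one common definition of flow efficiency, active work time divided by total elapsed time. Real tools, including Oobeya, may draw these boundaries differently.

```python
from datetime import datetime, timedelta

# Hypothetical phase boundaries; real tools may define phases differently.
PHASES = ("coding", "review", "merge", "deploy")


def cycle_time_breakdown(first_commit: datetime, pr_opened: datetime,
                         approved: datetime, merged: datetime,
                         deployed: datetime) -> dict[str, float]:
    """Split one change's cycle time into per-phase durations, in hours."""
    stamps = [first_commit, pr_opened, approved, merged, deployed]
    if any(later < earlier for earlier, later in zip(stamps, stamps[1:])):
        raise ValueError("timestamps must be in chronological order")
    return {
        phase: (end - start) / timedelta(hours=1)
        for phase, start, end in zip(PHASES, stamps, stamps[1:])
    }


def flow_efficiency(active_hours: float, total_hours: float) -> float:
    """Share of elapsed time spent on active work rather than waiting."""
    return active_hours / total_hours if total_hours else 0.0


breakdown = cycle_time_breakdown(
    datetime(2024, 5, 1, 9), datetime(2024, 5, 2, 9),
    datetime(2024, 5, 2, 15), datetime(2024, 5, 2, 16),
    datetime(2024, 5, 3, 10),
)
total = sum(breakdown.values())
print(breakdown, total)
# Assume (hypothetically) that 14 of those hours were hands-on work.
print(f"flow efficiency: {flow_efficiency(14, total):.0%}")  # 29%
```

Here the change spends 24 of its 49 hours in coding and 18 waiting for deployment, so a speedup confined to the IDE would leave most of the cycle time untouched.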

5. Developer Experience (DX) Layer: Understand the Human Impact

AI adoption fundamentally changes how developers work, think, and collaborate. A sustainable AI strategy must prioritize the human element.

Oobeya provides DX signals by correlating AI usage with:

- Cognitive load indicators

- Work intensity patterns and review responsibilities

- Context switching frequency

- Frustration signals (e.g., excessive rework or abandoned changes)

This layer highlights healthy usage patterns, flags overuse or dependency risks, surfaces signs of declining craftsmanship, and indicates where targeted coaching is required, completing the human perspective on responsible AI adoption.
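Most DX signals are qualitative, but some frustration signals, such as abandoned changes, can be counted. As a minimal sketch, assuming hypothetical (author, outcome) records rather than any Oobeya data model, the per-developer abandoned-change rate can be computed and compared against a threshold. The `ABANDON_RATE_THRESHOLD` value is an arbitrary illustration, and a flag is a prompt for a conversation or coaching, not a verdict on the developer.

```python
from collections import Counter

# Hypothetical cut-off; a sensible value depends on each team's own baseline.
ABANDON_RATE_THRESHOLD = 0.25


def abandoned_change_rates(changes: list[tuple[str, str]]) -> dict[str, float]:
    """Per-developer share of changes closed without being merged.

    `changes` holds (author, outcome) pairs; outcome is "merged" or "abandoned".
    """
    totals = Counter(author for author, _ in changes)
    abandoned = Counter(author for author, outcome in changes if outcome == "abandoned")
    # Counter returns 0 for authors with no abandoned changes.
    return {author: abandoned[author] / n for author, n in totals.items()}


def flag_for_follow_up(rates: dict[str, float],
                       threshold: float = ABANDON_RATE_THRESHOLD) -> list[str]:
    """Developers whose abandoned-change rate exceeds the threshold, sorted by name."""
    return sorted(author for author, rate in rates.items() if rate > threshold)


sample = [
    ("alice", "merged"), ("alice", "abandoned"), ("alice", "merged"), ("alice", "merged"),
    ("bob", "abandoned"), ("bob", "abandoned"), ("bob", "merged"),
]
rates = abandoned_change_rates(sample)
print(rates, flag_for_follow_up(rates))
```

Because the comparison is strict, alice at exactly 25% is not flagged, while bob, with two of three changes abandoned, is. In practice these rates would be compared across levels of AI usage to see whether heavy reliance on the assistant correlates with more abandoned work.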

6. Organizational ROI Layer: Quantify the Business Value

Engineering leaders must justify AI-related investments with quantifiable data. The final layer provides metrics that turn AI investment decisions from subjective judgment into data-backed strategy:

- License Utilization: Compares purchased licenses with actual usage so that seat counts match real organizational needs, optimizing cost.

- Output per License Cost: Relates the value created per user to license spend, providing a direct efficiency metric.

- Delivery Cost Reduction: Shorter cycle times and lower rework translate into measurable, auditable savings.

- Team Benchmarking: Identifies and celebrates high-performing, AI-effective teams for knowledge sharing.

This layer provides the definitive answer:

Is the investment in AI coding assistants worth the organizational cost?
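The arithmetic behind these ROI metrics is simple; the hard part is producing trustworthy inputs. In this minimal sketch, all function names and figures are hypothetical, and `hours_saved` is assumed to be an estimate derived from the delivery and quality layers (shorter cycle times, less rework), not a value any tool reports directly.

```python
def license_utilization(active_users: int, licenses: int) -> float:
    """Share of purchased seats that were actually used."""
    return active_users / licenses if licenses else 0.0


def cost_per_engaged_user(seat_price: float, licenses: int, engaged_users: int) -> float:
    """Total license spend divided by the users who actually accepted suggestions."""
    if engaged_users == 0:
        return float("inf")
    return seat_price * licenses / engaged_users


def net_monthly_value(hours_saved: float, loaded_hourly_rate: float,
                      seat_price: float, licenses: int) -> float:
    """Estimated delivery savings minus license spend for one month."""
    return hours_saved * loaded_hourly_rate - seat_price * licenses


# Hypothetical inputs: 200 seats at 20 per month, 150 active and 120 engaged users,
# and an estimated 300 engineering hours saved at a loaded rate of 60 per hour.
print(f"utilization: {license_utilization(150, 200):.0%}")                 # 75%
print(f"cost per engaged user: {cost_per_engaged_user(20, 200, 120):.2f}")  # 33.33
print(f"net monthly value: {net_monthly_value(300, 60, 20, 200):,.0f}")     # 14,000
```

Dividing spend by engaged users rather than by licenses makes idle seats visible: the nominal price per seat is 20, but the effective cost per user who benefits is about 33.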

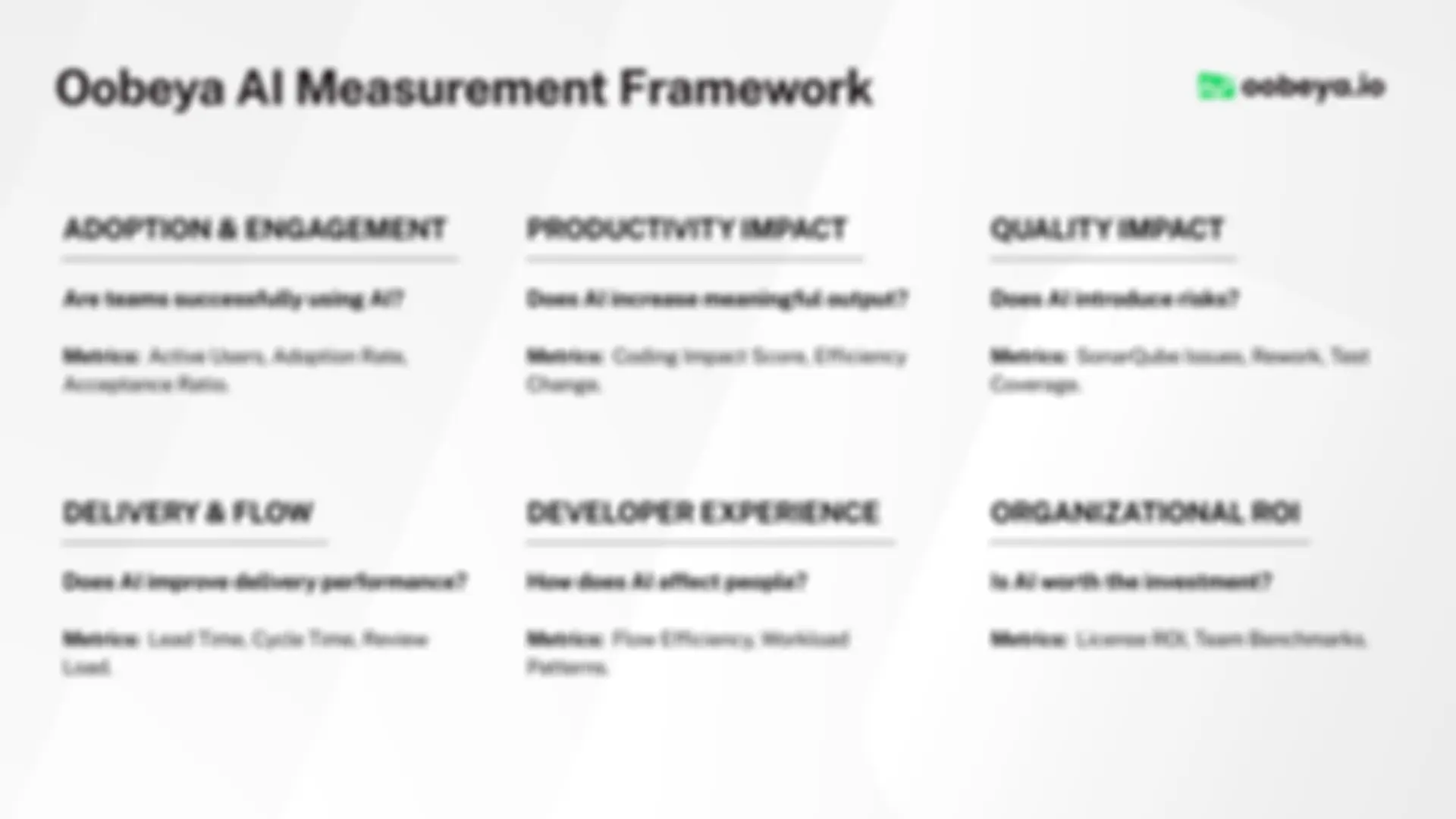

Summary: The Oobeya AI Measurement Framework

| Layer | Key Question | Example Metrics |
|---|---|---|
| Adoption & Engagement | Are teams successfully using AI? | Active Users, Adoption Rate, Acceptance Ratio |
| Productivity Impact | Does AI increase meaningful output? | Coding Impact Score, Efficiency Change |
| Quality Impact | Does AI introduce risks? | SonarQube Issues, Rework, Test Coverage |
| Delivery & Flow | Does AI improve delivery performance? | Lead Time, Cycle Time, Review Load, Flow Efficiency |
| Developer Experience | How does AI affect people? | Cognitive Load, Workload Patterns, Context Switching |
| Organizational ROI | Is AI worth the investment? | License ROI, Team Benchmarks |

How to Start Measuring AI Impact with Oobeya

You can begin today by activating Oobeya’s AI Coding Assistant Impact module. It provides the end-to-end visibility required for strategic AI governance:

- Organization-wide AI usage visibility

- Productivity and quality comparisons

- Team-level benchmarks

- ROI signals for strategic planning

Ready to move beyond anecdotal evidence? Book a demo to see how Oobeya transforms your AI investment into measurable value.

Written by Emre Dündar

Emre Dundar is the Co-Founder & Chief Product Officer of Oobeya. Before starting Oobeya, he worked as a DevOps and Release Manager at Isbank and Ericsson. He later transitioned to consulting, focusing on SDLC, DevOps, and code quality. Since 2018, he has been dedicated to building Oobeya, helping engineering leaders improve productivity and quality.